FPGA for AI (Artificial Intelligence): Ultimate Guide

27/03/2025, hardwarebee

Artificial intelligence has rapidly become central to modern technology, and the search for more efficient hardware to run it continues. Field-Programmable Gate Arrays (FPGAs) are an increasingly popular option, offering benefits that match the growing demands of AI applications. These reconfigurable devices, known for their flexibility and efficiency, are increasingly used to accelerate AI workloads, making them a hot topic in tech circles.

FPGAs stand out because their logic can be programmed for specific tasks after manufacturing, which makes them more adaptable than fixed-architecture processors. This has made them particularly attractive in AI development, where high performance, energy efficiency, and real-time processing are paramount. As AI models grow more complex, the reconfigurable nature of FPGAs lets developers tune the hardware itself, balancing performance, power consumption, and speed to fit each workload.

This ultimate guide delves deep into the world of FPGAs and their intersection with AI, exploring their advantages, comparing them with CPUs and GPUs, and revealing how they can be integrated into AI workloads. From practical implementation strategies to future trends and industry-specific applications, this comprehensive guide is designed to equip you with the knowledge you need to leverage FPGAs effectively in your AI projects.

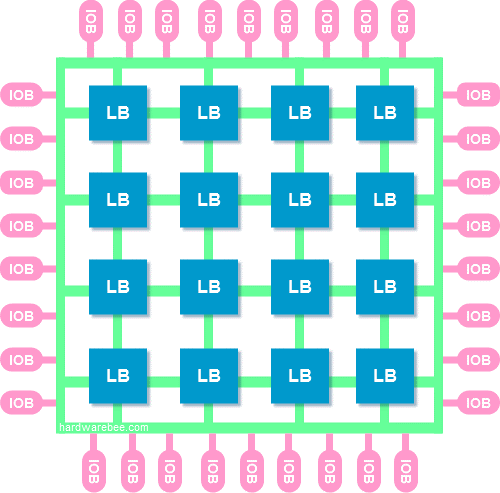

Understanding FPGAs: An Overview

Understanding Field-Programmable Gate Arrays (FPGAs) offers insight into their role in AI and related technologies. FPGAs are versatile integrated circuits used to boost performance across a wide range of applications. Unlike fixed-function hardware, they are built from programmable logic, organized as configurable logic blocks joined by programmable interconnect. This allows an FPGA to be tailored to a specific task.

Key Features of FPGAs:

- Real-Time Processing: Essential for autonomous vehicles and image recognition.

- Low Power Consumption: Often delivers better performance per watt than Central Processing Units (CPUs) and Graphics Processing Units (GPUs) on workloads that map well to custom hardware.

- Flexibility: Enables quick adaptation for different neural network inference tasks.

- Development Tools: Simplify the transition from concept to hardware implementation.

FPGAs excel in Digital Signal Processing (DSP), where real-time applications rely on their computational power. They are also instrumental in deep learning applications, where convolution layers benefit from an FPGA’s parallel processing capabilities.

FPGAs provide an edge in a domain where development time and adaptability are key, making them a strong competitor in the field of AI.

Key Advantages of Using FPGAs in AI

Field-Programmable Gate Arrays (FPGAs) have carved out an essential position in the realm of artificial intelligence (AI). These adaptable hardware devices are known for their ability to boost performance across diverse AI applications. FPGAs distinguish themselves with unique capabilities that enhance the sophistication and efficiency of neural network operations. They offer notable advantages, including flexibility, energy efficiency, and reduced latency, which are critical for real-time processing in AI-driven tasks. Their individualized hardware designs cater to specific requirements, promoting innovation and development in AI technologies. In a competitive field, FPGAs’ compatibility with deep learning applications and convolution operations elevates them as a viable option.

Flexibility and Reconfigurability

FPGAs are recognized for their unparalleled flexibility and reconfigurability in AI applications. These devices allow for customizable hardware designs due to their use of programmable logic and configurable logic blocks. Users can tailor FPGAs to suit specific neural network inference tasks, enhancing their adaptability. This design flexibility supports a wide range of applications, as FPGAs can efficiently switch between tasks without requiring extensive redesign. Development tools further streamline this process, facilitating quick transitions from concept to hardware implementation. As AI evolves, the ability to reconfigure FPGAs ensures that they remain relevant, encouraging continuous innovation and allowing developers to respond swiftly to technological advancements.

Energy Efficiency and Power Management

Power consumption is a significant consideration in AI development, and FPGAs offer marked benefits in this area. For workloads that map well to custom logic, they can deliver better power efficiency than traditional Central Processing Units (CPUs) and Graphics Processing Units (GPUs), reducing energy usage without sacrificing the computational power the task needs. This advantage makes FPGAs well suited to applications where power management is important, such as automotive systems and portable devices. Their energy-efficient operation supports sustainable AI development, critical in an increasingly environmentally conscious world. By reducing energy demands, FPGAs help designs stay within their power budgets, leaving more headroom for complex AI tasks. This balance of performance and energy savings highlights FPGAs as a compelling choice for modern AI solutions.

Reduced Latency and Real-Time Processing

Real-time processing is a cornerstone of numerous AI applications, including autonomous vehicles and image processing. FPGAs can greatly reduce latency because their logic operates in parallel and data can stream through a pipeline as it arrives, which is essential for tasks demanding immediate feedback and responses. With their ability to handle multiple operations simultaneously, FPGAs excel in Digital Signal Processing, ensuring smooth and prompt execution of tasks. This reduced latency is particularly advantageous in edge computing, where data needs to be processed locally and rapidly. In AI contexts, quick decision-making is often critical, whether for navigation systems in self-driving cars or real-time video analysis. FPGAs provide the computational power and rapid response necessary for such applications, positioning them as integral components in real-time AI systems.
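To make the latency argument concrete, here is a minimal sketch of how cycle counts behave in a pipelined design. It assumes an idealized pipeline with no stalls; the function names, the pipeline depth, and the clock frequency are illustrative choices, not figures for any real device.

```python
def pipeline_cycles(num_items: int, pipeline_depth: int,
                    initiation_interval: int = 1) -> int:
    """Cycles needed to push num_items through an idealized pipeline.

    The first result appears after pipeline_depth cycles; each further
    item follows initiation_interval cycles later (no stalls assumed).
    """
    if num_items <= 0:
        return 0
    return pipeline_depth + (num_items - 1) * initiation_interval


def latency_us(cycles: int, clock_mhz: float) -> float:
    """Convert a cycle count to microseconds (cycles / MHz = microseconds)."""
    return cycles / clock_mhz


if __name__ == "__main__":
    # Hypothetical numbers: a 10-stage pipeline clocked at 200 MHz.
    first_result = pipeline_cycles(1, pipeline_depth=10)
    whole_frame = pipeline_cycles(1000, pipeline_depth=10)
    print("first result after", latency_us(first_result, 200.0), "us")
    print("1000 items after", latency_us(whole_frame, 200.0), "us")
```

In this model the first result is available after only the pipeline depth, without waiting for a batch to fill, which is why streaming designs suit applications that need immediate responses.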

Comparing FPGAs with CPUs and GPUs

FPGAs, or Field-Programmable Gate Arrays, are known for their ability to be programmed after manufacturing. They are increasingly used in artificial intelligence (AI) applications. These devices provide a unique advantage over traditional CPUs and GPUs. Unlike CPUs, which are designed for general-purpose tasks, and GPUs, which excel at parallel processing, FPGAs offer a reconfigurable platform. This flexibility makes them ideal for tasks that require specific hardware optimization, like neural network inference and real-time processing, including image and signal processing. As industries seek more efficient solutions, understanding the strengths and limitations of each technology becomes critical. This section will explore how FPGAs compare to CPUs and GPUs, focusing on their configurations and performance metrics.

Reconfigurability vs. General Purpose Processing

Reconfigurability is at the core of FPGA benefits. These devices use programmable logic and configurable logic blocks, allowing them to be customized for specific tasks. This means FPGAs can adapt to a wide range of applications, from autonomous vehicles to image recognition. In contrast, CPUs and GPUs have fixed architectures built for general-purpose processing. CPUs run diverse tasks largely in sequence, while GPUs run many similar operations in parallel, making them suitable for workloads like deep learning applications. However, when the hardware itself must be tailored to a specific task, FPGAs have the edge. Their ability to be reconfigured also shortens hardware iteration compared with designing custom silicon, as developers can revise a design and load it onto the same device. This flexibility makes FPGAs attractive in environments needing quick adaptability and optimization.

Performance and Efficiency Metrics

When discussing performance and efficiency, FPGAs offer distinct advantages. Traditional processors, like CPUs and GPUs, are fixed in their architecture. FPGAs, however, can be configured so that both computational power and power efficiency are tailored to a specific application. This is particularly beneficial for real-time applications that demand high processing speeds and low power consumption. Compared with GPUs, which often draw considerably more power, FPGAs can reduce energy use while maintaining performance, especially in tasks like convolution operations and digital signal processing. In automotive applications, where real-time processing is key, FPGAs provide the computational power needed with enhanced power efficiency. By optimizing hardware resources for specific tasks, FPGAs achieve impressive results in performance metrics. This tailored efficiency makes them a compelling choice where both speed and conservation are required.
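Two metrics that are often used to compare accelerators on efficiency are energy per inference and inferences per joule. The sketch below shows the arithmetic only. The device labels and all numbers are hypothetical placeholders, not measurements, and should be replaced with figures measured on your own hardware.

```python
def energy_per_inference_mj(power_watts: float, latency_ms: float) -> float:
    """Energy for one inference in millijoules (watts x milliseconds)."""
    return power_watts * latency_ms


def inferences_per_joule(power_watts: float, throughput_per_s: float) -> float:
    """Efficiency as inferences completed per joule of energy."""
    return throughput_per_s / power_watts


if __name__ == "__main__":
    # Hypothetical placeholder values, NOT benchmark results.
    devices = {
        "device_a": {"power_watts": 10.0, "latency_ms": 2.0, "throughput_per_s": 500.0},
        "device_b": {"power_watts": 150.0, "latency_ms": 1.0, "throughput_per_s": 4000.0},
    }
    for name, d in devices.items():
        print(name,
              energy_per_inference_mj(d["power_watts"], d["latency_ms"]), "mJ/inference,",
              inferences_per_joule(d["power_watts"], d["throughput_per_s"]), "inferences/J")
```

Which metric matters depends on the deployment: a battery-powered edge device usually cares about energy per inference at batch size one, while a data center may care more about throughput per watt.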

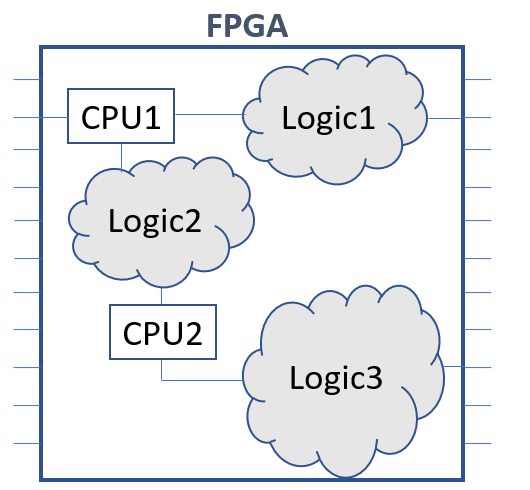

FPGA Integration for AI Workloads

Field-programmable gate arrays (FPGAs) offer unique advantages for AI workloads. Unlike traditional hardware like central processing units (CPUs) or graphics processing units (GPUs), FPGAs can be programmed to meet specific needs. This makes them adaptable for complex tasks such as neural network inference and deep learning applications. They provide higher power efficiency and real-time processing capabilities, important for a wide range of applications from image recognition to autonomous vehicles. Additionally, FPGAs’ customizable nature allows for optimized hardware designs that cater to real-time applications with stringent performance and power consumption requirements. With the help of programmable logic and development tools, FPGAs can be tailored to carry out specific computations efficiently, significantly reducing development time. This flexibility supports various AI tasks, making FPGAs a popular choice in the realm of artificial intelligence.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are essential in AI, particularly for image processing tasks. They use convolution layers to detect patterns in images, making them adept at tasks like image recognition. FPGAs are excellent for CNNs because they allow for real-time processing. The configurable logic blocks of an FPGA can be optimized for convolution operations, improving computational power while maintaining power efficiency. This makes FPGAs more attractive than traditional hardware for CNN applications. Additionally, their ability to handle a range of applications from simple to complex helps accelerate the deployment of AI models in real-world scenarios.
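As a rough illustration of what a convolution layer asks of the hardware, the following sketch computes a small 2D convolution using integer multiply-accumulate (MAC) operations only. It is a plain software model, not FPGA code: on an FPGA, the two inner loops are the part that would typically be unrolled into parallel multipliers. The function name and the sample data are illustrative.

```python
def conv2d_int(image, kernel):
    """Valid-mode 2D convolution (cross-correlation form, as used in CNNs).

    image and kernel are lists of lists of integers, modelling the
    fixed-point values a hardware implementation would typically use.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0  # accumulator for one output pixel
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]  # one MAC
            row.append(acc)
        output.append(row)
    return output


if __name__ == "__main__":
    image = [[1, 2, 3],
             [4, 5, 6],
             [7, 8, 9]]
    kernel = [[1, 0],
              [0, -1]]
    print(conv2d_int(image, kernel))
```

Every output pixel is independent of the others, which is why the operation maps well to parallel hardware.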

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed to handle sequential data. Applications like automated text generation or speech recognition rely on RNNs for accurate results. FPGAs can enhance RNN performance thanks to their adaptable architecture. Because each RNN step depends on the result of the previous one, the logic can be arranged to process each step as soon as its input arrives, reducing the overhead often associated with such tasks. The hardware resources of an FPGA can be allocated efficiently, providing the necessary throughput for real-time applications. With development tools available, programmers can create bespoke solutions that closely match the demands of RNNs, thus speeding up the processing of extensive datasets.

Transformers and Autoencoders

Transformers and autoencoders play critical roles in AI learning models, particularly in transforming and reconstructing data. Transformers excel in tasks involving language models, while autoencoders focus on compressing data and reducing noise. Incorporating FPGAs for these networks ensures high performance through their configurable logic. Hardware implementation on FPGAs allows for speedier execution of transformer model layers which can be computationally intensive. Similarly, for autoencoders, FPGAs provide the necessary power to manage large volumes of data throughput with minimal latency. By making real-time data processing feasible, the advantages of FPGAs unfold in applications requiring quick response times and high precision. This ability to customize and optimize both transformers and autoencoders makes FPGAs a valuable asset in AI technology advancements.

Techniques for Optimizing FPGA in AI

Field-programmable gate arrays (FPGAs) are becoming a popular tool for optimizing artificial intelligence (AI) applications. They offer a unique set of advantages like flexibility and power efficiency. Unlike traditional central processing units (CPUs) or graphics processing units (GPUs), FPGAs provide the versatility needed for customized hardware implementations. This makes them ideal for real-time applications such as image processing, digital signal processing, and autonomous vehicles. By using FPGAs, companies can tailor neural networks to specific needs while also reducing power consumption. These devices allow for rapid development and real-time processing, enabling a wide range of applications in sectors like automotive and deep learning. The flexibility and configurability of FPGAs offer developers the opportunity to create applications with increased computational power without sacrificing energy efficiency. In this way, optimizing FPGAs for AI tasks not only enhances performance but also contributes to more sustainable technology solutions.

Model Simplification

Model simplification is a key technique in optimizing FPGAs for AI. Simplifying complex neural networks can enhance the efficiency of programmable gate arrays. With fewer parameters, a model requires less computation and power when it runs on an FPGA. This leads to faster processing and lower power consumption, benefiting a wide range of applications. For example, image recognition systems can be simplified to use fewer convolution layers, speeding up real-time processing. Simplification can also involve pruning connections or using lower-precision data types. Reducing complexity helps in quick deployment and minimizes development time for AI applications. This approach is particularly useful for edge devices where computational power and battery life are limited. The use of simplified models on FPGAs can result in efficient hardware implementations that remain effective. This not only makes the technology more accessible but also enhances its range of applications.
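The two simplification steps mentioned above, pruning connections and lowering precision, can be sketched in a few lines. This is a minimal illustration assuming magnitude-based pruning and symmetric 8-bit quantization with a single scale per tensor; real toolchains offer more sophisticated schemes, and the function names and threshold here are illustrative.

```python
def prune_by_magnitude(weights, threshold):
    """Set weights whose absolute value is below threshold to zero."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def quantize_int8(weights):
    """Symmetric int8 quantization: returns (integer values, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return [0 for _ in weights], 1.0
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate real values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.4, -0.02, 1.0, 0.01, -1.0]
    pruned = prune_by_magnitude(weights, threshold=0.05)
    q, scale = quantize_int8(pruned)
    print("pruned:   ", pruned)
    print("quantized:", q)
    print("restored: ", dequantize(q, scale))
```

Zeroed weights can be skipped entirely in hardware, and 8-bit integers need far smaller multipliers than 32-bit floating point, which is where the computation and power savings come from.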

Architecture Customization

Architecture customization allows FPGAs to meet specific needs in AI applications. Unlike fixed hardware designs in CPUs or GPUs, FPGAs offer programmable logic, which enables customization. This flexibility means developers can create specific architectures that better suit their neural networks. Customization can improve efficiency by tailoring hardware resources to the application. This is key for tasks demanding high computational power, such as image processing or real-time processing in autonomous vehicles. Configurable logic blocks in FPGAs enable precise adjustments to the architecture. These adjustments can lead to improved performance in deep learning applications. The ability to customize the architecture ensures the FPGA is not just a one-size-fits-all solution. Applications can be optimized with specific hardware requirements, enhancing both speed and efficiency. As a result, architecture customization in FPGAs may be one of the most promising avenues for advancing AI technologies, ensuring they remain versatile and adaptable for future developments.
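One common form of architecture customization is choosing how many operations to perform in parallel. The sketch below is a deliberately simplified cycle model for a dot product built from a chosen number of parallel multipliers feeding a pipelined adder tree. The model and the function name are assumptions for illustration; a real design would also need to account for memory bandwidth and routing.

```python
def dot_product_cycles(vector_length: int, parallel_multipliers: int) -> int:
    """Rough cycle estimate for a dot product with P parallel multipliers.

    Simplified model: ceil(N / P) multiply passes, plus the depth of a
    binary adder tree, ceil(log2(P)), to combine the P products.
    """
    if parallel_multipliers <= 0:
        raise ValueError("parallel_multipliers must be positive")
    if vector_length <= 0:
        return 0
    multiply_passes = -(-vector_length // parallel_multipliers)  # ceiling division
    adder_tree_depth = (parallel_multipliers - 1).bit_length()   # ceil(log2(P))
    return multiply_passes + adder_tree_depth


if __name__ == "__main__":
    for p in (1, 8, 64, 1024):
        print(p, "multipliers ->", dot_product_cycles(1024, p), "cycles")
```

The trade-off is visible in the model: more multipliers cut the cycle count but consume more of the device's logic and DSP resources, so the right degree of parallelism depends on the application's latency target and the FPGA's capacity.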

Deployment of FPGAs in AI at the Edge

Field-programmable gate arrays (FPGAs) are revolutionizing how AI is deployed at the edge. These devices are ideal for neural network tasks due to their configurable logic blocks. FPGAs offer a wide range of uses, especially in real-time applications where speed and power efficiency are important. By allowing for custom hardware designs, they provide a high degree of flexibility. Because of their low power consumption, FPGAs are well-suited for edge environments. They can serve in numerous applications, from image recognition to autonomous vehicles. Their ability to handle complex computations directly on hardware makes them a preferred choice over graphics processing units (GPUs) and central processing units (CPUs).

Challenges and Solutions for Edge AI

Deploying AI at the edge presents several challenges, particularly concerning computational power and power efficiency. FPGAs address these issues by providing a balance between speed and energy use. They can handle tasks like neural network inference more efficiently than general-purpose hardware. However, some challenges remain, such as making the best use of limited hardware resources. To tackle this, development tools and frameworks have been introduced. These tools simplify the FPGA programming process, making it easier to tailor hardware designs for specific applications. Another approach is to map convolution operations onto the FPGA’s dedicated digital signal processing resources, improving real-time performance.

Use Cases in Real-Time Applications

FPGAs excel in real-time applications due to their ability to process data with very low latency and high precision. One key area is image processing, where FPGAs handle tasks like image recognition rapidly and accurately. They are also key in automotive applications, powering features such as autonomous driving. In these environments, real-time data processing is vital, and FPGAs deliver with minimal latency. FPGAs also support deep learning applications by efficiently handling convolution layers. Their versatility in hardware implementation and capability to adapt to various tasks make them integral in a range of sectors, ensuring that real-time applications run smoothly and effectively.

Industry-Specific Applications of FPGA in AI

Field-Programmable Gate Arrays (FPGAs) are gaining traction across various industries thanks to their flexibility and efficiency. These programmable gate arrays stand out due to their ability to handle a wide range of applications, including deep learning and neural network processing. Unlike traditional central processing units (CPUs) or graphics processing units (GPUs), FPGAs offer real-time processing capabilities ideal for neural network inference. Their configurable logic blocks make them suitable for designing hardware specific to individual tasks. This allows industries to optimize power consumption and boost computational power for various real-time applications.

Healthcare Innovations

In healthcare, FPGAs are leading to significant advancements in both diagnosis and treatment. Their real-time processing abilities allow for quick decision-making, which is important in critical situations. For instance, they are used in advanced image recognition systems for tasks like tumor detection. These systems can process high-resolution images quickly, aiding doctors in making timely decisions. Additionally, FPGAs, with their low power consumption, play a role in portable medical devices, enabling remote patient monitoring without frequent charging. Overall, these advantages of FPGAs contribute to more efficient and effective healthcare solutions.

Automotive and Autonomous Systems

The automotive industry benefits immensely from the adaptable nature of FPGAs, especially for autonomous vehicles. These vehicles require robust real-time processing for tasks such as image recognition and decision-making. FPGAs can efficiently handle these tasks through digital signal processing and customizable hardware designs, ensuring safety and precision. Moreover, the power efficiency of FPGAs means they can support autonomous systems without the need for excessive energy, which is essential for prolonged journeys. By reducing development time, these programmable logic devices accelerate advancements in automotive technology, paving the way for safer and smarter vehicles.

Finance and Real-Time Data Analysis

In the finance sector, FPGAs offer a distinct edge for real-time data analysis. They excel in processing large streams of financial data quickly, providing valuable insights with very little delay. This capability is critical for high-frequency trading, where even microseconds can mean substantial financial gains or losses. FPGAs allow firms to implement customizable hardware solutions tailored to specific trading strategies, maximizing efficiency. Their range of applications includes risk assessment and fraud detection through deep learning applications. By leveraging FPGAs, finance companies can enjoy the dual benefits of speed and accuracy, setting themselves apart in a competitive market.

Practical Implementation Strategies

Field-programmable gate arrays (FPGAs) are transforming how artificial intelligence (AI) applications are developed and deployed. They offer customizable hardware designs, allowing developers to precisely tune resources for specific tasks. This results in increased performance efficiency across a wide range of applications. When working with FPGAs for AI, one must carefully consider factors such as power consumption, real-time processing needs, and the computational power required for the task at hand. By strategically planning the FPGA implementation, developers can achieve optimal performance for complex tasks like neural network inference and image processing. Below, we explore design and development tips, as well as tools and platforms critical to FPGA AI projects.

Design and Development Tips

Designing for AI on FPGAs demands a unique approach due to their programmable logic. Developers should start by identifying the type of AI applications, such as real-time image recognition or neural network inference. Understanding the computational power required and the power efficiency goals will guide the hardware implementation. Next, selecting appropriate configurable logic blocks and mapping out convolution layers can significantly reduce development time.
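A useful first step when mapping out convolution layers is a back-of-the-envelope estimate of the work and storage each layer needs. The sketch below counts multiply-accumulate (MAC) operations and weight storage for one layer, then derives a lower bound on clock cycles for a given number of parallel MAC units. The function names and layer dimensions are illustrative, and the estimate ignores memory bandwidth and control overhead.

```python
def conv_layer_macs(out_h: int, out_w: int, in_channels: int,
                    out_channels: int, kernel_h: int, kernel_w: int) -> int:
    """Total multiply-accumulate operations for one convolution layer."""
    return out_h * out_w * in_channels * out_channels * kernel_h * kernel_w


def conv_layer_weight_bytes(in_channels: int, out_channels: int,
                            kernel_h: int, kernel_w: int,
                            bits_per_weight: int = 8) -> int:
    """Bytes needed to store the layer's weights at a given precision."""
    total_bits = in_channels * out_channels * kernel_h * kernel_w * bits_per_weight
    return (total_bits + 7) // 8  # round up to whole bytes


def min_cycles(macs: int, macs_per_cycle: int) -> int:
    """Lower bound on cycles if macs_per_cycle MAC units stay fully busy."""
    return -(-macs // macs_per_cycle)  # ceiling division


if __name__ == "__main__":
    # Hypothetical layer: 56x56 output, 64 -> 64 channels, 3x3 kernel.
    macs = conv_layer_macs(56, 56, 64, 64, 3, 3)
    print("MACs:", macs)
    print("weight bytes (8-bit):", conv_layer_weight_bytes(64, 64, 3, 3))
    print("cycles with 512 MAC units:", min_cycles(macs, 512))
```

Comparing these numbers with the multiplier count and on-chip memory of a candidate FPGA gives an early indication of whether a layer fits and how much parallelism is realistic.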

For wide-ranging AI applications, efficient allocation of hardware resources is vital. Consider the specific needs of Digital Signal Processing and autonomous vehicles, which require rapid, real-time data processing. Balancing these needs against the FPGA’s power budget is key to maintaining efficiency and performance.

Finally, leveraging development tools that support FPGAs can streamline the design process. These tools assist in simulating neural networks on FPGAs and optimizing hardware solutions tailored to specific application needs. By following these design strategies, developers can make the most of FPGAs’ advantages in AI.

Tools and Platforms for FPGA AI Projects

Selecting the right tools and platforms is essential for the success of FPGA AI projects. Development platforms provide frameworks for implementing neural networks on FPGAs, ensuring the efficient use of hardware resources. These platforms often come with pre-defined libraries and modules that simplify the development process.

One of the most popular tools for FPGA development is the Vivado Design Suite from AMD (formerly Xilinx). It provides comprehensive support for the company’s FPGA families and aids in optimizing hardware designs for deep learning applications. Developers can use its advanced features to implement convolution operations and real-time processing tasks with precision.

Altera’s Quartus Prime is another powerful tool, offering similar capabilities with an emphasis on reducing development time. It supports integrating FPGAs into systems and workflows built around traditional central processing units (CPUs) and graphics processing units (GPUs), adding computational power for demanding AI tasks.

In summary, the judicious selection of tools and platforms not only aids in crafting sophisticated FPGA-based solutions but also ensures the efficient execution of AI projects across diverse sectors, from automotive applications to image processing. Through smart strategy and leveraging the right technology, FPGAs can significantly enhance AI performance and power efficiency.

Future Trends in FPGA and AI Technology

As demand for AI grows, field-programmable gate arrays (FPGAs) are gaining traction due to their flexibility and efficiency. They offer a wide range of applications, from image recognition to autonomous vehicles. This flexibility makes them a valuable tool in the fast-evolving AI landscape. Looking ahead, FPGAs are expected to play an even larger role in real-time processing and low-power scenarios. Their ability to support deep learning applications and neural network inference is pushing boundaries in AI technology.

Scalability and Customization

Scalability and customization have become crucial in AI as applications grow more complex. FPGAs shine in these areas due to their programmable logic and configurable logic blocks. They allow developers to tailor hardware resources to meet specific needs. This customization helps achieve high computational power while maintaining power efficiency. Unlike traditional central processing units (CPUs) or graphics processing units (GPUs), FPGAs can adjust to a range of applications, offering both flexibility and scalability. This means faster development time and easier adaptation to new AI challenges. As technology progresses, FPGAs will continue to support scalable and customized solutions for AI.

Innovations and Emerging Technologies

The landscape of AI and FPGA technology is constantly evolving, with new innovations around every corner. One emerging trend is the integration of FPGAs into real-time applications such as autonomous driving and digital signal processing. These innovations are driven by the need for hardware designs that can adapt quickly to changing demands. Convolution operations, a key part of neural network models, benefit greatly from FPGA enhancements. By optimizing field-programmable gate arrays, developers can improve real-time image processing and neural network inference. Additionally, cutting-edge development tools are making it easier to implement these advanced technologies. FPGAs are set to revolutionize not just AI, but a wide range of industries reliant on real-time, high-performance processing.